A Tale of Two Meltdowns

In a more leisurely bygone era, banks became insolvent when borrowers didn’t pay back the loans the bank held. But in today’s world, it is the complexity of the assets a bank holds that can send it over the edge, not the loans themselves. The infamous Lehman Brothers bankruptcy in 2008 is a case in point. The bank fell into an ultimately fatal liquidity crisis because it could not prove to the market that its assets were solid. In short, Lehman didn’t have a clear enough picture of how sound its assets were, for the simple reason that it didn’t have a clue as to how to value those assets and rate their risk. Basically, the assets were out of contact with economic fundamentals and took on a life of their own.

Complexity is the culprit here. When bank assets are so complex that nobody inside or outside the bank can understand them, investors refuse to supply cash to pump up the bank’s liquidity. As a result, banks hold on to the money they do have and stop lending it to customers. But when the credit markets freeze, so does a capitalist economy, since the lifeblood of such an economy is a reliable, ongoing supply of credit.

The problem here is that the entire financial system has become far too complex to be sustained. There is a case to be made that we have reached a state of institutional complexity that is impossible to simplify, short of a total collapse. The world’s biggest banks need to become simpler, a lot simpler. But it’s almost impossible for a complex, bureaucratic and publicly traded organization like Citibank or UBS to voluntarily downsize. The end result, as we are now painfully aware, is a “meltdown” of the entire financial system. To fix the idea of a complexity overload leading to a crash of one sort or another, let’s look quickly at another meltdown, this time one that’s literal, not metaphorical.

On March 11, 2011, an unprecedented earthquake rocked the seabed off the northeast coast of Japan, creating a tsunami that swamped the systems put in place to protect the nuclear power reactors at the Fukushima plant. This led to massive amounts of radiation spewing forth from the reactors that caused hundreds of deaths, billions of dollars of property damage and untold amounts of human misery, not to mention social discontent in Japan that threatens to topple social structures that have been in place since the end of the Second World War.

The ultimate cause of this social discontent is a “design basis accident,” in which the tsunami created by the earthquake overflowed the retaining walls designed to keep seawater out of the reactor. The overflow damaged backup electrical generators intended to supply emergency power for pumping water to cool the reactors’ nuclear fuel rods. This was a two-fold problem. First, the designers planned the height of the walls for a magnitude 8.3 quake, the largest that Japan had previously experienced, not considering that a quake might someday exceed that level. Second, and even worse, the generators were located on low ground where any overflow would short them out. And that was not all.

Some reports claimed that the quake itself actually lowered ground level by two feet, further exacerbating the problem. So everything hinged on the retaining walls doing their job — which they didn’t! This is a case of too little complexity in the control system (the combination of the height of the wall and the generator location) being overwhelmed by too much complexity in the system to be controlled (the magnitude of the tsunami).

It’s clear from these two examples that an overdose of complexity can be bad for a society’s health. But where, exactly, does this complexity mismatch enter into the way human social processes take place? To answer that question, we have to look a bit deeper into the overall pattern by which events unfold.

Trends and Transitions

At a random moment in time, the generic behavior of any social system is to be in a trending pattern. In other words, if you ask how things will look tomorrow, the answer is that they will be just a bit better or a bit worse than today, depending on whether the trend at the moment is moving up or down. This is what makes trend-following so appealing: It’s easy and it’s almost always right, except when it isn’t. Those moments when a trend turns are rare (infinitesimally small in the set of all time points, actually) and usually surprising within the context of the situation in which the question about the future arises. These rare events are called critical points, the moments where the system is rolling over from one trend to another. If that rolling-over process involves great social damage in lives, dollars and/or existential angst, we call the transition from the current trend to the new one an X-event. In the natural sciences, especially physics, such a transition is often associated with a “flip” from one phase of matter to another, as with the transition from water to ice or to steam. Here we focus just on human-caused X-events, and not on those, like hurricanes and earthquakes, that nature throws our way.

An obvious, but crucial, question arises from the above scenario: Can we predict where the critical points will occur? In situations where you have a large database of past observations about the process and/or a dynamical model that you believe in for how the system unfolds in time, you can sometimes use tools of probability and statistics and/or dynamical system theory to identify these points. Such cases often occur in the natural sciences. But they almost never occur in the social realm. In human processes, we generally have too little data and/or no believable model, at least no data or model for the kinds of “shocks” that can send humankind back to a pre-industrial way of life overnight. In short, in such situations we are dealing with “unknown unknowns.”

In this X-events regime, we cannot pinpoint where the critical points will be. This is due to the fact that events, X- or otherwise, are always a combination of context and a random trigger that picks out a particular event from the spectrum of potential events that the context creates. In other words, at any given time the context, which is always dynamically changing, allows for a variety of possible events that might be realized. The one event that is actually realized at the next moment in time is determined by a random “shove” that sends the system into one attractor domain from among the set of possibilities. Since by its very nature a random trigger has no pattern, it cannot be forecast. Hence, the specific event that turns up cannot be forecast with precision either. Note that this does not mean that every possibility is equally likely. It simply means that while some potential events are more likely to be seen than others, the random factor may step in to produce a realized event that is a priori unlikely.
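The interplay of context and random trigger can be sketched in a few lines of Python. This is only an illustration of the structure of the argument, not a forecasting method: the event names and the weights in `context` are hypothetical, and the function names `realize_event` and `simulate` are invented here for the example.

```python
import random
from collections import Counter

def realize_event(context, rng):
    """Apply one random 'shove': pick a single event from those the context allows."""
    events = list(context)
    weights = [context[event] for event in events]
    return rng.choices(events, weights=weights, k=1)[0]

def simulate(context, trials, seed=0):
    """Replay the same context many times and count which events were realized."""
    rng = random.Random(seed)  # seeded only so the sketch is repeatable
    return Counter(realize_event(context, rng) for _ in range(trials))

# Hypothetical context: the weights are assumptions made up for illustration.
context = {
    "trend continues": 0.90,
    "mild reversal": 0.09,
    "system crash": 0.01,
}

counts = simulate(context, trials=10_000)
for event in context:
    print(f"{event}: realized {counts[event]} times")
```

In any single draw the a priori unlikely event can still be the one that turns up, which is the point of the paragraph above. The sketch quietly assumes that the weights are known.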

The problem with speaking here about “likelihoods” is that this terminology is tied up with the tacit assumption that there is a probability distribution that we know, or at least in principle can discover, and that can be used to calculate the relative likelihood of seeing any of the possible events. But when it comes to the X-events regime, where there is neither data nor a model, this assumption is simply wrong. There may indeed exist such a probability distribution. But if so, it lives in some platonic universe beyond space and time, not in the universe we actually inhabit. So what to do? How do we characterize and measure risk in an environment in which probability theory, statistics, and dynamical system theory cannot be effectively employed?

Complexity Gaps and Social Mood

As all human systems are in fact a combination of two or more systems in interaction, not a single system in isolation, a possible answer to the question of how to characterize the risk of an X-event is to identify the level of complexity overload, or “complexity gap,” that emerges between the systems in interaction. The size of that gap serves to measure how close you are to a system collapse. (Note: Here the term “complexity” refers to the number of independent actions available to the system at a given time. In general, the greater the number of such actions, the greater the complexity.)
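As a rough illustration of this definition, the following Python sketch counts the distinct actions available to each of two systems and takes the difference as the gap. Counting distinct actions is only a crude stand-in for “independent” actions, and the action lists and function names are hypothetical, chosen purely for the example.

```python
def complexity(actions):
    """Complexity proxy: the number of distinct actions available right now."""
    return len(set(actions))

def complexity_gap(system_a, system_b):
    """Absolute difference between the two systems' complexity levels."""
    return abs(complexity(system_a) - complexity(system_b))

# Hypothetical action sets, invented for illustration only.
bank_actions = [
    "lend",
    "securitize loans",
    "hedge with derivatives",
    "trade off balance sheet",
    "repackage debt",
]
regulator_actions = ["audit", "set capital requirements"]

gap = complexity_gap(bank_actions, regulator_actions)
print(gap)  # 5 distinct bank actions minus 2 regulator actions gives 3
```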

To make the story as simple as possible (but not simpler, to paraphrase Einstein’s famous remark), let me here focus on the simplest case in which we have two systems in interaction, such as in the financial meltdown example discussed earlier. In that case, the two systems are the financial services sector and the government regulators. These systems each have their own level of complexity, usually associated in some way with the number of degrees of freedom the system has to take independent actions at a given time. This complexity level is continually changing over the course of time as possibilities for action come and go, so that the complexity difference between the two systems also rises and falls in a dynamic fashion. This continually changing difference gives rise to what I call a “complexity gap” between the two systems. As long as this gap doesn’t get too big, everything is fine and the systems live comfortably in some degree of harmonic balance. But a widening of the gap generates a stress between the systems. And if the gap grows beyond a critical level, one or both of the systems “crash.” In the financial example, the only way to have avoided the X-event would have been to narrow the gap by either increasing the complexity of the regulator or reducing the complexity of the financial sector. But human experience shows that voluntary downsizing almost never happens, nor does “upsizing.” So a systemic crash is what can be expected, and in fact is what actually took place.
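The dynamics just described can be sketched as a toy simulation in Python. Everything numerical here is an assumption made for illustration: the starting complexity levels, the drift rates, the size of the random fluctuations and, above all, the critical gap, which in a real system is not known in advance. The function name `first_crash_step` is likewise hypothetical.

```python
import random

def first_crash_step(steps, critical_gap, sector_drift, regulator_drift, seed=0):
    """Return the first step at which the gap exceeds critical_gap, or None.

    Each system's complexity follows a random walk with its own drift,
    standing in for possibilities for action that 'come and go'.
    """
    rng = random.Random(seed)
    sector = regulator = 10.0  # assumed starting complexity levels
    for step in range(1, steps + 1):
        sector = max(0.0, sector + sector_drift + rng.uniform(-1.0, 1.0))
        regulator = max(0.0, regulator + regulator_drift + rng.uniform(-1.0, 1.0))
        gap = abs(sector - regulator)
        if gap > critical_gap:
            return step  # the gap has passed the critical level: a "crash"
    return None

# Financial sector complexity drifts upward while the regulator stands still.
widening = first_crash_step(
    steps=1000, critical_gap=20.0, sector_drift=0.5, regulator_drift=0.0
)
print("gap becomes critical at step:", widening)
```

Raising `regulator_drift` toward `sector_drift` keeps the two systems closer together on average, which corresponds to the “upsizing” of the regulator mentioned above.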

To graphically illustrate this idea, think of stretching a rubber band with the two ends of the band representing the banks and the regulators. The length of the band measures the difference in complexity between the two, the complexity gap as it were. As the band is stretched, the gap increases and you can actually feel the tension growing in your muscles. As you keep pulling, the gap reaches the limits of elasticity of the rubber band and the band snaps. In other words, the system “crashes” in what we see as an X-event.

While it’s not possible to pinpoint the precise point at which any particular rubber band will actually break, it certainly is possible to know when you’re pushing the boundary of elasticity just by feeling the tension in your muscles as you continue pulling the two ends of the band apart. This increased tension is a way of anticipating when the system is entering the danger zone where a collapse is imminent.

At the critical point when the system is poised to crash, all it takes to shove it over the edge into a new state is an intrinsically unpredictable random push or pull on the system in one direction or another. Thus, where the system actually ends up after this X-event has taken place is inherently unpredictable. For human systems, the overall “social mood” of the population within which the system exists has a strong biasing effect on what does or does not emerge as the “winner” from among the set of possible outcomes of the crash.

In essence, the social mood, along with the complexity gap, is the force driving the change in context that ultimately combines with the random catalyst to create what actually occurs. A good metaphor to keep in mind here is that the entire process is much like weather forecasting. Measurements of temperature, wind velocities, humidity and the like on a given day circumscribe the set of physical possibilities for the weather tomorrow. But what actually turns up when you look out the window is fixed by the flapping wings of that famous butterfly in the Brazilian rain forest that triggers the tornado in Miami today. So, again, fortune’s formula is Context + Randomness = Event.

A final question regarding this transition from trends to critical points to X-events and beyond is: What properties enable some systems to survive and possibly even prosper (i.e., be “reborn”) in the new environment society faces after the X-event has run its course? The answer to this central question of long-range planning is tied up with the notion of system resilience.

Resilience and Creative Destruction

Contrary to popular belief, the notion of a system’s “resilience” is not just jargon for stability. System resilience is a much deeper and more subtle concept than just the ability of the system to return to its former structure and mode of operation following a major shock. In point of fact, returning to “doing what you were doing before” is never possible. The world moves on and you either move with it or you go out of business. So any idea of “stability,” at least as that term is used in both mathematics and everyday life, is a non-starter. Resilience, however, is not about remaining stable in the face of uncertain disturbances (usually thought to be from outside the system itself, but not necessarily). Rather, it is about being able to adapt to changing circumstances while maintaining the ability to continue performing a productive function so as to avoid extinction. Sometimes that function involves doing what you were doing earlier, but now doing it in a different manner to facilitate survival in the new environment. But more often it means changing the very nature of your business to fit more comfortably into the new environment. In short, a resilient system not only survives the initial shock but can actually benefit from it.

Note also that the notion of resilience is not absolute; a system may be resilient to a particular shock, say a financial crisis, but totally vulnerable to collapse from another shock like an Internet failure. So, in fact, resilience is a “package deal,” in the sense that it is totally intertwined with a particular shock. Thus, we cannot say that a system is resilient without specifying, or at least tacitly understanding, the shock that it is able to resist.

A reasonably compact list of the properties of a resilient system is the “3 A’s”: Assimilation, Agility and Adaptability. A resilient system must be able to absorb or “assimilate” a shock and continue to function. It should also be “agile” enough to change its mode of behavior when faced with new circumstances created by the shock. And, finally, the resilient system should be ready to “adapt” to that changed environment if an adaptation will serve to enhance the system’s survivability. Note here that agility and adaptability are two different things. The first refers to the system’s ability to recognize changed circumstances, while adaptability means that the system is willing and ready to act upon that recognition so as to actually benefit from the shock, not simply survive it.

The idea of benefiting from an X-event raises an important question of time scale. When an X-event occurs, almost without fail it’s seen as something negative, an occurrence to be avoided, since it usually involves a major change of the status quo. And that’s just the kind of change most people seem to like least. So in the short term, the X-event is regarded as a disaster of one type or another. A good example is the meltdown of the Japanese nuclear power plant at Fukushima that we discussed earlier. About one year after that event, I was giving a series of lectures in Tokyo and found just about everyone in the audiences I addressed still in a state of shock over that X-event. Many were almost equally shocked when I said that if I went to sleep today and woke up in ten years, I’d be willing to bet that the majority of the people in the room would tell me that Fukushima was not the worst thing that ever happened to Japan; rather, they would say it was the best thing that ever happened! Why? Simply because that mega-X-event opened up a huge number of degrees of freedom for action in the social, political, economic and technology sectors in Japan that could never have been created by gradual, evolutionary change. It took a Fukushima-level revolutionary change to blast the country out of an orbit that it had been stuck in for the last 30 years. Only a major shock of the Fukushima type could shake up the relationship between society, government and industry in the way needed to give the country an opportunity to be “reborn.”

My assertion to the Japanese is a variant of the famous idea of “creative destruction” popularized by the Austrian-American economist Joseph Schumpeter in the 1940s. The sorts of X-events described here must take place in order for “eco-niches” to be created in the social landscape for new ideas, new products, and new ways of doing business to emerge. The dinosaurs were not resilient enough to survive the Yucatan asteroid impact 65 million years ago, which opened up the niches for today’s humans to emerge. The point here is that we should not regard X-events as something to be avoided; avoiding them is impossible anyway. Rather, they should be seen as being as much an opportunity as a problem. In the short term, they are a problem; in the longer-term perspective, they are an opportunity. The resilient organization will recognize this duality and take steps today to capitalize on it.

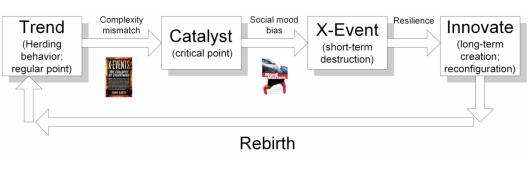

Many of the basic concepts discussed in this paper are present in the diagram above. These include social mood/complexity gaps (first arrow), critical points (second box) and the overall notion of system resilience (last arrow and boxes 3 and 4).

